C4 models allow us to visualize software architecture by decomposition in containers and components.

Viewpoints are organized in hierarchical levels:

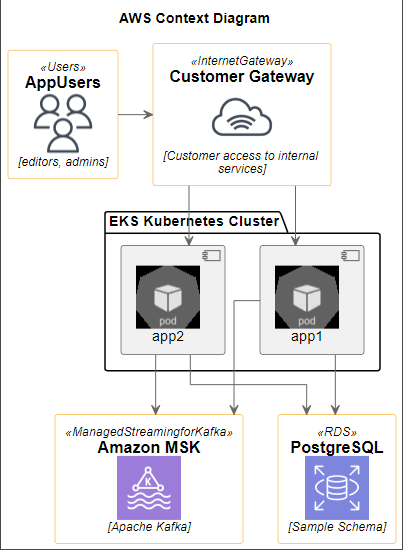

Context Diagrams (Level 1)

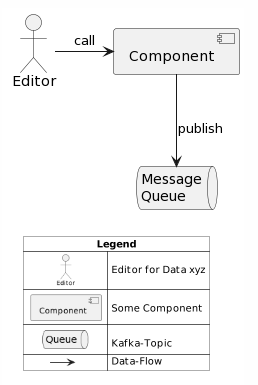

Container Diagrams (Level 2)

Component Diagrams (Level 3)

Code Diagrams (Level 4)

C4-PlantUML offers a variety of macros and stereotypes that make modeling fun.

An example in PlantUML:

sample.puml @startuml !include <c4/C4_Context.puml> !include <c4/C4_Container.puml> left to right direction Person(user, "User") System_Ext(auth, "AuthService", "Provides authentication and authorization via OIDC") System_Boundary(zone1, "Some system boundary") { System(lb, "Load Balancer") System_Boundary(az, "App Cluster") { System(app, "App Servers") { Container(app1, "App1", "Docker", "Does stuff") Container(app2, "App1", "Docker", "Does stuff") ContainerDb(dbSess, "Session DB", "Redis") ContainerDb(db1, "RBMS 1", "AWS RDS Postgres") ContainerDb(db2, "RBMS 2", "AWS RDS Postgres") ' both app servers sync sessions via redis Rel(app1, dbSess, "Uses", "Sync Session") Rel(app2, dbSess, "Uses", "Sync Session") ' both app servers persist data in RDBMS Rel(app1, db1, "Uses", "Persist/query relational data") Rel(app2, db2, "Uses", "Persist/query relational data") } } } Rel(user, lb, "call") Rel(lb, app1, "delegate") Rel(lb, app2, "delegate") Rel(app1, auth, "Verify", "User auth") Rel(app2, auth, "Verify", "User auth") SHOW_FLOATING_LEGEND() @enduml ...